The (il)logic of legibility – Why governments should stop simplifying complex systems

Sometimes, you learn about an idea that really sticks with you. This happened to me recently when I learnt about “legibility” – a concept which James C Scott introduces in his book Seeing Like a State.

Just last week, I was involved in two conversations which highlighted how pervasive the logic of legibility continues to be in influencing how governments think and act. But first, what is legibility?

Defining legibility

Legibility describes the very human tendency to simplify complex systems in order to exert control over them.

In this blog, Venkatesh Rao offers a recipe for legibility:

- Look at a complex and confusing reality…

- Fail to understand all the subtleties of how the complex reality works

- Attribute that failure to the irrationality of what you are looking at, rather than your own limitations

- Come up with an idealized blank-slate vision of what that reality ought to look like

- Argue that the relative simplicity and platonic orderliness of the vision represents rationality

- Use power to impose that vision, by demolishing the old reality if necessary.

Rao explains: “The big mistake in this pattern of failure is projecting your subjective lack of comprehension onto the object you are looking at, as ‘irrationality.’ We make this mistake because we are tempted by a desire for legibility.”

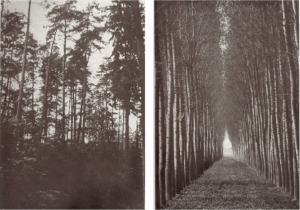

Scott uses modern forestry practices as an example of the practice of legibility. Hundreds of years ago, forests acted as many things – they were places people harvested wood, but also places where locals went foraging and hunting, as well as an ecosystem for animals and plants. According to the logic of scientific forestry practices, forests would be much more valuable if they just produced timber. To achieve this, they had to be made legible.

So, modern agriculturalists decided to clear-cut forests and plant perfectly straight rows of a single species of fast-growing tree. It was assumed this would be more efficient. Planting just one species meant the quality of timber would be predictable. In addition, the straight rows would make it easy to know exactly how much timber was there, and would mean timber production could be easily monitored and controlled.

For the first generation of trees, the agriculturalists achieved higher yields, and there was much celebration and self-congratulation. But after about a century, the problems of ecosystem collapse started to reveal themselves. In imposing a logic of order and control, scientific forestry destroyed the complex, invisible, and unknowable network of relationships between plants, animals and people, which is necessary for a forest to thrive.

After a century it became apparent that relationships between plants and animals were so distorted that pests were destroying crops. The nutrient balance of the soil was disrupted. And after the first generation of trees, the forest was not thriving at all.

Scott uses this example (and many others!) to highlight the perversity of (governments) imposing legibility on systems which, by their nature, cannot be made legible. As mentioned earlier, last week I was involved in two conversations which powerfully demonstrated this point.

Legibility and evaluation

The first conversation was with Eleanor Williams, Director of the Centre for Evaluation and Research Evidence at the Victorian Department of Health. A group from the Centre for Public Impact met with her to discuss evaluation in complexity.

Through that conversation we agreed that there is a tendency in government to believe that, if only we capture enough evidence – if we gather and crunch enough data, and use those data to measure performance against predefined outcomes – then we’ll know what works, and how to effectively address the challenge at hand.

However, what governments are doing here is imposing a logic of legibility. And the simplifications required to make the illegible legible mean that this approach is flawed. Toby Lowe explains:

“the measures are an abstraction. They are a simplification of the complex, multifaceted nature of real life into a data point. The measures are a pauperised, context-free, superficial substitute for reality.”

So, what to do? Do we throw our hands up in despair and never measure or evaluate anything again? Absolutely not. To quote Toby again, “We can’t get better without reflecting on what we do, how we do it, and the relationship between those two things and the effects they create in the world.”

We don’t need to get rid of measurement and evaluation; what we need to do is to shift our approach to what we measure, how we measure and why we measure:

- What we measure: We need to be thinking about how to capture and incorporate different forms of information – quantitative data, stories, emotions, world models and relationships. We should constantly be asking ourselves, what data is missing? Whose voices are missing? What untested assumptions are we making, and how do these obscure other truths? And we need to be reflecting on how our position and power in the world shapes how we gather, interpret and ultimately make decisions using the information we have collected.

- How we measure: Evaluating interventions in complex settings requires a dynamic approach. Methods such as developmental evaluation offer frameworks for evaluating programs in real time, staying in touch with what’s unfolding and responding accordingly, rather than more traditional methods which focus on attempting to control implementation and the evaluation process.

- Why we measure: As my colleague John Burgoyne has written previously, “measurement should not be used for top-down control, but rather to learn about complex problems and the people experiencing them, so we can adapt and improve our approach.”

We need approaches to measurement and evaluation which embrace and work with complexity, rather than obfuscating it through attempts to make complex systems legible.

Legibility in ethics

The second conversation came up as part of a project I’m doing with Lorenn Ruster from the 3A Institute, which is exploring what role the value of dignity plays in shaping government AI Ethics frameworks.

In our regular catch-up call, Lorenn was telling me about the work that the Gradient Institute is doing to create quantitative, mathematical representations of fairness that can be incorporated into AI systems to promote fair AI-driven decisions.

While I see the merits of this approach – particularly in contexts where AI tools are making unsupervised decisions, such as whether to approve or reject a loan application – coding fairness seems like another powerful example of trying to make the illegible legible.

How could you ever, in code, capture enough nuance and complexity to grapple with what is fair, for whom, at any particular moment in time?

It feels important to acknowledge that humans, left to their own devices, are not necessarily better at making “fair” decisions – we know that all sorts of biases inform our decision-making processes. And yet, by virtue of attempting to translate fairness into code, it feels like what sits beneath this is a belief that there is some objective truth to be found around what fairness is, rather than an acknowledgement that fairness is complex, dynamic, and ever-shifting as societal values and norms evolve.

Sitting with illegibility

These attempts to make the illegible legible are not surprising – complex challenges are, by their nature, uncomfortable. However, what governments tend to do when confronted by complex challenges is to deny their complexity, and attempt to make them legible. James C Scott explains:

“No administrative system is capable of representing any existing social community except through a heroic and greatly schematized process of abstraction and simplification. It is not simply a question of capacity, although, like a forest, a human community is surely far too complicated and variable to easily yield its secrets to bureaucratic formulae.”

This has been part of the mission of the Centre for Public Impact: to encourage governments to engage with complexity, rather than pretending it’s not there. Ultimately, this is about supporting governments to think more systemically. To link back to the forest metaphor, instead of cutting down the forest and planting neat rows of trees, governments should be learning about mycorrhizal networks.

Let’s open our eyes. Let’s lean into the reality of not knowing. Because, in the process of relentlessly trying to reduce things to a point where we can know, the beautiful messiness that makes this world unique, dynamic and constantly surprising is irrevocably diminished.

More practically, too, we encourage governments to accept that not all is knowable, because we believe that attempts to impose legibility onto complex systems will fail and deliver poor results. Only once governments accept that some things are illegible – once they learn to say, ‘I don’t know’ – will they really be able to start effectively engaging with the complex challenges that characterise our world, and our times.

Biography

Thea Snow leads the Centre for Public Impact’s work in Australia and New Zealand. Thea’s experience spans the private, public and not-for-profit sectors; she has worked as a lawyer, a civil servant and, most recently, as part of Nesta’s Government Innovation Team. She also holds an MSc in PPA from the London School of Economics and Political Science.

Article source: LSE Impact of Social Sciences Blog, CC BY 3.0. Note: This article gives the views of the author, and not the position of the LSE Impact Blog, nor of the London School of Economics. This post originally appeared on the Centre for Public Impact’s Medium page.

Header image source: Steven Kamenar on Unsplash.