In the know: AI and RealKM | ChatGPT as malevolent AI | Rigour in complexity

In the know is a regular roundup of knowledge management (KM) topics of discussion and the articles, events, videos, and podcasts that are grabbing the attention of KM experts across our community.

Artificial intelligence (AI) and RealKM Magazine

With artificial intelligence (AI) identified as one of eight emerging innovative concepts in knowledge management (KM)1, and a recent paper2 exploring opportunities for AI in KM, RealKM’s Bruce Boyes has started to investigate the use of AI in RealKM Magazine’s production process.

He has been trialling the use of WordTune Read, one of the content summarisers in Fast Company’s recent list of 33 AI tools you can try for free3. To date, he has used WordTune Read to assist in writing three of the most recent articles in the “Open access to scholarly knowledge in the digital era” series and the second article in the “Introduction to knowledge graphs” series. At the end of each of the four articles, Bruce has acknowledged the use of WordTune Read in preparing the article, consistent with the WAME Recommendations on ChatGPT and Chatbots in Relation to Scholarly Publications4 and similar guidance in other academic journals.

Bruce notes that there have been significant mistakes in each of the four summaries initially drafted by WordTune Read, caused by condensing, merging, or truncating sentences in a way that completely changed their meaning. He has therefore still needed to check and edit the summaries, and also to add author acknowledgements throughout the text.

However, even with this checking and editing, Bruce reports that the assistance provided by WordTune Read has meant spending considerably less time preparing these four articles than on previous similar summaries. This time saving is very significant for a small non-profit with limited resources such as RealKM Magazine.

So, Bruce intends to keep using WordTune Read going forward, and also to investigate the use of other potentially suitable AI options. But, based on his experience, he warns that the outputs of AI cannot be assumed to be completely correct, and so must always be checked.

Should ChatGPT be considered a malevolent AI, and destroyed?

The recently released ChatGPT artificial intelligence (AI) chatbot has attracted massive interest globally, including from members of the knowledge management (KM) community.

A wide range of perspectives have emerged in regard to both the usefulness and desirability of ChatGPT, and some of these views have been documented here in RealKM Magazine.

However, despite the diverse perspectives, there are two things that are not in doubt in regard to ChatGPT, for now at least. One is that at least some of the information that ChatGPT returns is not factual, and the other is that ChatGPT can be manipulated to provide information that is not just incorrect, but inappropriate.

Given this, Alexander Hanff argues in an article5 in The Register that:

“Based on all the evidence we have seen over the past four months with regards to ChatGPT and how it can be manipulated or even how it will lie without manipulation, it is very clear ChatGPT is, or can be manipulated into being, malevolent. As such it should be destroyed.”

Hanff is a computer scientist and leading privacy technologist who helped develop Europe’s GDPR and ePrivacy rules. ChatGPT told him that he was dead, based on information that included fake links to articles that have never existed.

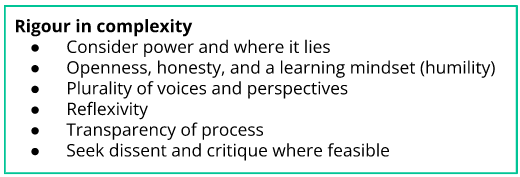

Global conversations on rigour in complexity

The Centre for Public Impact Australia and New Zealand (CPI ANZ) recently hosted global conversations on rigour in complexity6.

The conversations were convened in response to a growing list of people from around the world working in government, academia, and the arts who wanted to discuss the concept of rigour and how we might know whether we are doing rigorous work in complexity.

From the conversations, the following principles have been suggested:

References:

1. Schenk, J. (2023). Innovative Concepts within Knowledge Management. Proceedings of the 56th Hawaii International Conference on System Sciences, 4901-4910.

2. Jarrahi, M. H., Askay, D., Eshraghi, A., & Smith, P. (2023). Artificial intelligence and knowledge management: A partnership between human and AI. Business Horizons, 66(1), 87-99.

3. Newman, J. (2023, February 27). 33 AI tools you can try for free. Fast Company.

4. Zielinski, C., Winker, M., Aggarwal, R., Ferris, L., Heinemann, M., Lapeña, J. F., … & Citrome, L. (2023). Chatbots, ChatGPT, and Scholarly Manuscripts: WAME Recommendations on ChatGPT and Chatbots in Relation to Scholarly Publications. Afro-Egyptian Journal of Infectious and Endemic Diseases, 13(1), 75-79.

5. Hanff, A. (2023, March 2). Why ChatGPT should be considered a malevolent AI – and be destroyed. The Register.

6. Lowther, K. (2023, February 28). A global conversation on rigour in complexity. Centre for Public Impact Australia and New Zealand (CPI ANZ).