Testing the reliability of research data

Originally posted on The Horizons Tracker.

The open science movement has attempted to improve the reliability and reputation of science by ensuring that all data used in scientific research is made publicly available, both for external scrutiny of the data and so that studies can be reproduced.

Whilst the movement is gathering pace, open data is still not the norm across the board, especially in certain disciplines that appear to be more reluctant to open up. Most of the time this reticence is purely down to cultural or practical reasons, but sadly there are also times when it is designed to cover up the use of dubious data.

A recent paper [1] describes an AI-based system that is designed to examine data for its reliability. The system, developed by researchers at the University of Illinois at Urbana-Champaign, is capable of reconstructing all possible data sets that could give rise to the reported results of the research. All it requires is the mean, standard deviation and a few data points.

Data reliability

The system, which they refer to as CORVIDS (Complete Recovery of Values in Diophantine Systems), is designed to test the reliability of the research findings. If it’s able to reconstruct valid data sets, the user can then assess whether this data looks plausible or not.

The system first works out the linear equations from which the statistics being examined are calculated. It then explores all combinations of numbers that can solve these equations. The approach was first proposed in the third century AD by Diophantus of Alexandria but, suffice to say, he didn’t have the computing power to do the job effectively.
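To make the idea concrete, here is a minimal sketch of the search described above. It is not the CORVIDS algorithm itself: it swaps the Diophantine solver for a plain brute-force enumeration, and it assumes the responses are integers on a known scale and that the sample size is known. The function name `candidate_datasets` and its parameters are hypothetical, chosen only for illustration.

```python
from itertools import combinations_with_replacement
from statistics import mean, stdev


def candidate_datasets(n, reported_mean, reported_sd, low, high, decimals=2):
    """Brute-force sketch (NOT the CORVIDS solver): list every sorted tuple
    of n integers in [low, high] whose mean and sample standard deviation
    match the reported values once rounding is allowed for."""
    # Assumption: statistics were reported rounded to `decimals` places,
    # so any value within half a unit of the last digit counts as a match.
    tol = 0.5 * 10 ** (-decimals) + 1e-9
    matches = []
    # Order does not affect the mean or SD, so multisets are enough.
    for values in combinations_with_replacement(range(low, high + 1), n):
        if abs(mean(values) - reported_mean) > tol:
            continue
        if abs(stdev(values) - reported_sd) > tol:
            continue
        matches.append(values)
    return matches


# Hypothetical reported statistics: 5 responses on a 1-5 scale.
solutions = candidate_datasets(n=5, reported_mean=3.0, reported_sd=1.58,
                               low=1, high=5)
print(solutions)
```

If the list comes back empty, no data set on that scale could have produced the reported statistics. Brute force like this only scales to small samples, which is one reason a dedicated solver is needed in practice.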

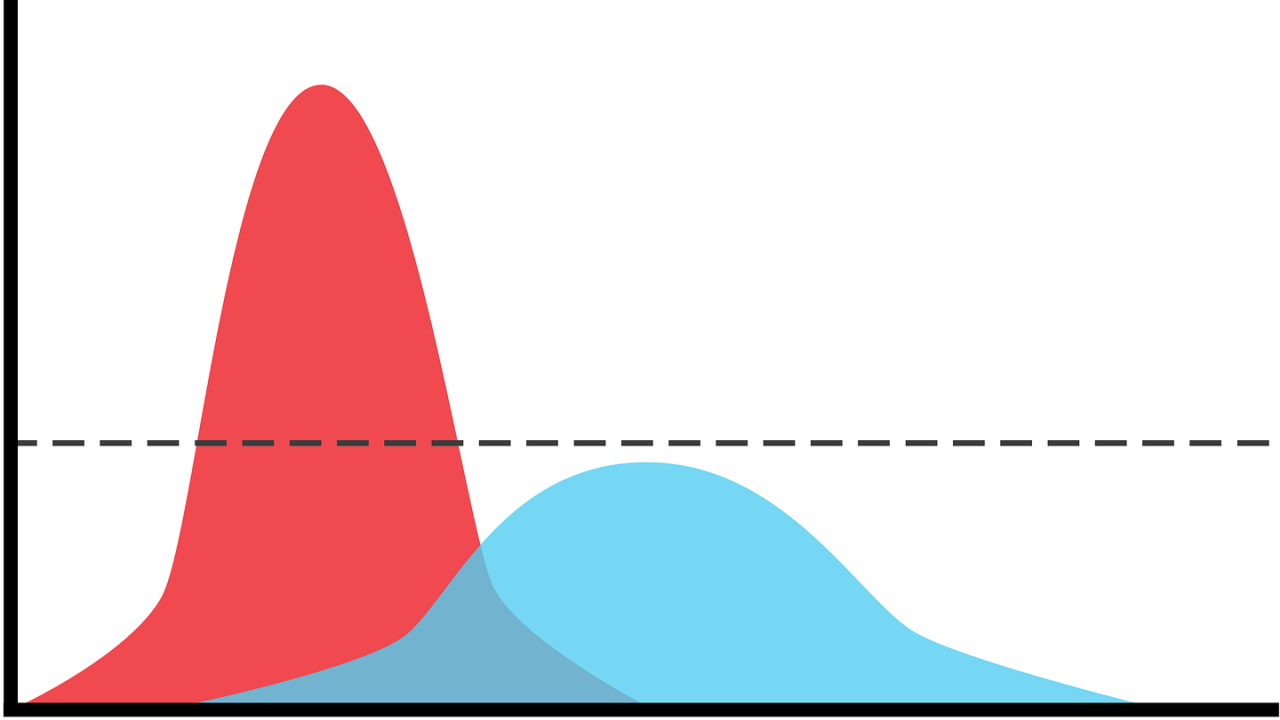

The reconstructed data sets are turned into histograms, which are then combined into a three-dimensional chart that makes it easier to spot any unusual patterns. Whilst this makes the results relatively easy to interpret, the reconstruction itself can take a few hours to run.
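As a rough illustration of that step, the sketch below turns each candidate data set into a histogram (a count per scale value) and stacks the histograms into a grid, which is the kind of structure a three-dimensional chart could be drawn from. The data sets, the 1-5 scale and the helper name `to_histogram` are all hypothetical, and the paper's own plotting code may work differently.

```python
from collections import Counter


def to_histogram(values, low, high):
    """Count how often each scale value from low to high occurs."""
    counts = Counter(values)
    return [counts[v] for v in range(low, high + 1)]


# Hypothetical candidate data sets on a 1-5 scale (illustrative only).
datasets = [(1, 2, 3, 4, 5), (1, 1, 3, 5, 5), (2, 2, 3, 4, 4)]

# One row per data set, one column per scale value.
grid = [to_histogram(d, 1, 5) for d in datasets]
for row in grid:
    print(row)
```

Each row is one possible data set, so a reader can scan down the grid for implausible shapes, such as responses piled up at both extremes with nothing in between.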

The team believe that initially the application will be used by editors and reviewers to identify problems with the papers that are submitted to their journals as early as possible, so that they can be discussed with the authors. This is generally a much easier process than submitting the full data set alongside the paper.

The system is unlikely to fix the challenges facing the scientific industry on its own, but it is a nice step in the right direction, and will make it harder for poor science to find a hiding place.

Article source: Testing The Reliability Of Research Data.

Header image source: Image 2562325 by StockSnap on Pixabay is in the Public Domain.

Reference:

1. Wilner, S., Wood, K., & Simons, D. J. (2018). Complete recovery of values in Diophantine systems (CORVIDS).