Exploring the science of complexity series (part 17): Concept 8 – Adaptive agents

This article is part 17 of a series of articles featuring the ODI Working Paper Exploring the science of complexity: Ideas and implications for development and humanitarian efforts.

Complexity and agency – Concepts 8, 9, and 10

Certain kinds of systems are made up of individual adaptive agents acting for their own purposes, and with their own view of the situation. Such agents can be powerful in shaping the system. A special class of complex systems is made up of adaptive agents (Concept 8), which react to the system and to each other, and which may make decisions and develop strategies to influence other agents or the overall system. The ways in which these actors interact can give rise to self-organised phenomena (Concept 9). And as agents operate in a system, changes in the system and changes in the other actors can feed back, leading to co-evolution of agents and the system (Concept 10).

Concept 8 – Adaptive agents

Outline of the concept

All living things are adaptive agents. Individual people are adaptive agents, so are the teams they work in, and so are organisations. The earth has been described as an adaptive agent in James Lovelock’s Gaia hypothesis1. Some complex systems are said to be adaptive or evolving when individual adaptive agents respond to forces in their environments via feedback. Regardless of size and nature, adaptive agents share certain characteristics, in that they react to the environment in different ways2. Some adaptive agents may also be goal directed; still more may attempt to exert control over their environment in order to achieve these goals. Agents may have goals that can take on diverse forms, including desired local states; desired end goals; rewards to be maximised; and internal needs (or motivations) that need to be kept within desired bounds. They can sense the environment and respond through physical or other behaviours or actions. They may also have internal information processing and decision-making capabilities, enabling them to compare the environmental inputs and their own behavioural outputs with their goals. They may anticipate future states and possibilities, based on internalised models of change (which may be incomplete and/or incorrect); this anticipatory ability often significantly alters the aggregate behaviour of the system of which an agent is part. They may also be capable of abstract self-reflection and internally generated sources of unpredictable conduct3.

Complex systems made of adaptive agents are distinguished by the term complex adaptive systems, and they exhibit a number of specific phenomena, which will be seen in the next two concepts4. The ability of adaptive agents to perceive the system around them and act on these perceptions means that their view of the world dynamically influences, and is influenced by, events and changes within the system.

Detailed explanation

Adaptive agents’ behaviour within a system has been characterised in a number of ways5. Not all adaptive agents exhibit all possible properties. Some may be simply reactive: units within the system that are capable of exhibiting ‘different attributes in reaction to changed environmental conditions’ 6. Others may also be goal-directed, in that they are reactive and they direct some part of their reactions towards achieving goals (which may be built-in or an emergent phenomenon). Finally, some agents may be planner units, which are reactive, goal-directed and attempt to exert some degree of control over the environment in order to achieve these goals. The terms ‘weak agent’ and ‘strong agent’ are used to describe these different capacities.

First and foremost, adaptive agents are able to perceive their own state, that of other agents and the state of their environment. This perception process is not always exact. Rather, it acts as a filter in which some information about the environment may be discarded and other information distorted or only partially assimilated. In a social setting, agents’ perceptions include the social and cultural context inhabited by the agents, which means that a particular environmental context may be perceived differently by different agents.

Agents also have the ability to take action, both in changing their social and natural environments and in interacting with other agents. The conversion of perception into action might not involve a process of internal deliberation. The same perception may not always result in the same action being performed – differences may arise owing to changes in the internal state of the agent.

Agents can retain knowledge of a history of past events, with the ability to learn over time and the potential to make plans for the future. In the case of weak agents, the reaction to the current environment may be influenced by past events in a simplistic way, such that beneficial behaviours are repeated and costly behaviours are not.
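The weak-agent rule described above (repeat what paid off, avoid what cost) can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not a method taken from the working paper: the class name `WeakAgent`, the action labels and the payoff numbers are all hypothetical.

```python
import random


class WeakAgent:
    """Hypothetical reactive agent: keeps a running score per action."""

    def __init__(self, actions, seed=0):
        self.scores = {action: 0.0 for action in actions}
        self.rng = random.Random(seed)  # seeded so runs are repeatable

    def choose(self):
        # Prefer the action with the best remembered outcome; break ties at random.
        best = max(self.scores.values())
        candidates = [a for a, s in self.scores.items() if s == best]
        return self.rng.choice(candidates)

    def learn(self, action, payoff):
        # Beneficial outcomes raise the score, costly ones lower it.
        self.scores[action] += payoff


# Illustrative payoffs only: foraging pays off, idling carries a small cost.
PAYOFFS = {"forage": 1.0, "idle": -0.5}

agent = WeakAgent(PAYOFFS.keys())
history = []
for _ in range(10):
    action = agent.choose()
    agent.learn(action, PAYOFFS[action])
    history.append(action)
```

With these payoffs the agent settles on foraging from its second step at the latest, whichever action it happens to try first. There is no planning involved, only a memory of what worked.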

More sophisticated agents may have more complex behaviour. For example, so-called strong agents have ‘beliefs’, ‘desires’ and ‘intentions’ that are used to formulate plans for future behaviour. These plans are based both on the current state of the environment and its past history and on the perceived states and history of other agents. The ‘beliefs’ may also have cultural content, so reactions to events can be biased by assumptions about the world. Similarly, ‘desires’ may be conflicting and further complicate an agent’s decision to take action. Between them, the ‘beliefs’ and ‘desires’ lead to the formulation of an ‘intention’ to take action. Unlike weak agents, strong agents are capable of setting and attempting to carry out purposeful, goal-driven behaviour.
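The way ‘beliefs’ and ‘desires’ combine into an ‘intention’ is often called the belief-desire-intention (BDI) pattern in the agent literature. The sketch below is a deliberately small, hypothetical rendering of that idea; the class `StrongAgent`, the `can_<goal>` belief keys and the weights are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class StrongAgent:
    """Hypothetical belief-desire-intention style agent."""

    beliefs: dict = field(default_factory=dict)  # what the agent thinks is true
    desires: dict = field(default_factory=dict)  # goal name -> importance weight

    def perceive(self, observation):
        # Beliefs are updated from perception; they may still be wrong or partial.
        self.beliefs.update(observation)

    def deliberate(self):
        # Keep only the desires the agent believes are currently achievable,
        # then commit to the most important one as the intention.
        feasible = {
            goal: weight
            for goal, weight in self.desires.items()
            if self.beliefs.get(f"can_{goal}", False)
        }
        if not feasible:
            return None
        return max(feasible, key=feasible.get)


agent = StrongAgent(desires={"trade": 0.8, "farm": 0.5})
agent.perceive({"can_trade": False, "can_farm": True})
intention = agent.deliberate()  # trading is desired more but believed infeasible
agent.perceive({"can_trade": True})
revised = agent.deliberate()    # the belief changed, so the intention changes
```

The same desires lead to different intentions once a belief changes, which is the sense in which identical events can provoke different reactions from agents holding different assumptions about the world.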

Agents interact with each other, potentially in nonlinear ways. This interaction can include observation, communication, physical interaction, spread of disease, imitation of perceived successful behaviour, cooperation to achieve common goals, competition for resources, and hunting, gathering or agricultural practices. Although the response to a given perception may be known beforehand, the interactions between agents mean that the overall behaviour of a system of agents will not, in general, be knowable without running simulations such as agent-based models (which will be covered shortly).

The difference between weak and strong agents plays out here. In the case of reactive (weak) agents, most of the emergent larger-scale structure of the system is entirely driven by this interaction. For cognitive (strong) agents, while interaction may still be important, their internal perceptions and cognitions play a significant role. Agents can retain a history of interactions with other identifiable individuals, so that, for example, if they make a trade with another agent that later proves to have been unfavourable, they will be less likely to trade with that individual in the future. Furthermore, the representation of realistic networks of social contact is possible, and the effects of this on, for example, formation of opinions, can be investigated.

Finally, agents will generally be diverse in their properties and behaviours. This arises not only because they have divergent properties, such as age, gender and cultural values, but also because their experienced history of the environment and other agents is diverse. Even in a system where the agents begin with the same properties, differences arise over time. On the other hand, there is the possibility of convergence of behaviour and the formation of collective belief through the exchange of information between agents 7. In either case, behaviour will be very different from aggregate average behaviour expected from a set of identical entities in an identical environment.

Where an adaptive agent is part of a system made up of other adaptive agents, it may spend time adapting to the patterns of other agents as well as to the broader system dynamics. By way of example, a policy analyst working in international relations who finds sources of knowledge, ideas and credibility can be seen as an adaptive agent. Groups of such agents can be seen as adaptive agents, generating policy-shifting behaviours, for example. An organisation can be described as an adaptive agent, reacting to the complex stimuli surrounding it. This highlights the point made previously, that complex systems have multiple levels, or hierarchies.

Given the argument that human beings are adaptive agents par excellence, it should be no surprise that social, political and economic life are characterised by complex systems made up of adaptive agents – known as complex adaptive systems. Ideas about such complex systems stem less from the physical sciences and – again, unsurprisingly – more from fields such as biology, ecology, computing and artificial intelligence. This brings the idea of perception, reflection and conscious action into the complexity science perspective. At its simplest, feedback processes occur where actors receive information about the effects of their activities and their environment, so that they can continue doing something if it has the desired effect, or they think it will have the desired effect, and alter their actions if things do not work as planned.

There may be greater dynamism and unpredictability in a system of adaptive agents whose perceptions can influence the system. For example, the existence of adaptive agents leads to feedback processes such as tipping points and self-fulfilling prophecies. Tipping points are essentially bifurcations, described in Concept 7. Malcolm Gladwell suggested that social tipping points are brought about by three kinds of people (or adaptive agents, in complexity terms) who help to create change. These are mavens (information gatherers), salespeople (those who are good at convincing other people of their point of view) and networkers (those who are able to connect with a wide range of people). Gladwell shows how these three kinds of agents can be identified as playing crucial roles in a range of different ‘tipping points’, from the rise in popularity of the hula hoop to the American War of Independence8.

Self-fulfilling prophecies are also worth exploring briefly here. As outlined in Concept 1, world financial markets are an example of a complex, interconnected system with multiple simultaneous feedback loops9. Self-fulfilling prophecies are fundamental to understanding certain market movements. Specifically, when stocks are rising (a bull market), the belief that further rises are probable gives investors an incentive to buy, leading to further rises. At certain points, the increased price of the shares, and the knowledge that there must be a peak after which the market will fall, ends up deterring buyers (negative feedback). Once the market begins to fall regularly (a bear market), some investors may expect further losing days and refrain from buying (positive feedback), but others may buy because stocks become more and more of a bargain (negative feedback). Although this is a simplified model, this basic principle is at the heart of the investment approach that George Soros calls ‘reflexivity’.
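The interplay of positive and negative feedback in this market story can be caricatured with a two-term price rule. The sketch below is a toy under loud assumptions, not a model of any real market or of Soros’s strategy: the function `simulate`, the `momentum` and `reversion` coefficients and the fair value of 100 are all hypothetical.

```python
def simulate(momentum, reversion, steps=50, fair_value=100.0):
    """Toy price path: trend-following pushes, bargain-hunting pulls back."""
    prices = [fair_value, fair_value + 1.0]  # start with a small rise
    for _ in range(steps):
        trend = prices[-1] - prices[-2]  # recent rise or fall
        gap = prices[-1] - fair_value    # how far price sits from fair value
        # Positive feedback: buyers chase the trend.
        # Negative feedback: buyers retreat (or return) as the gap widens.
        prices.append(prices[-1] + momentum * trend - reversion * gap)
    return prices


runaway = simulate(momentum=1.1, reversion=0.0)  # belief in further rises only
damped = simulate(momentum=0.5, reversion=0.2)   # both kinds of feedback present
```

With trend-chasing alone the initial rise feeds on itself and the price runs away; once a restoring pull is added, the same initial rise dies out and the price returns towards its starting level.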

A number of tools may be used to study the behaviour of adaptive agents in the social realms. Perhaps the most widely utilised is game theory, which codifies the types of decision often facing agents, of how best to fulfil one’s aims and proceed in competitive, or cooperative, environments. This is illustrated by the famous Prisoners’ Dilemma (PD) game10, which has seen wide application within economic and institutional theory. Agent-based modelling (ABM) is a particularly powerful tool which is increasingly being used by complexity theorists. This is outlined in more detail in Box 1.
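The structure of the Prisoners’ Dilemma can be written down as a small payoff table. The sketch below uses the commonly quoted illustrative payoffs (5, 3, 1, 0); these numbers and the helper `best_reply` are assumptions chosen for the example rather than values from the working paper.

```python
# Payoffs are (row player, column player). "C" = cooperate, "D" = defect.
# Illustrative values: temptation=5, reward=3, punishment=1, sucker=0.
PAYOFF = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}


def best_reply(opponent_move):
    """Return the move that maximises the row player's own payoff."""
    return max("CD", key=lambda move: PAYOFF[(move, opponent_move)][0])


# Defecting is the best reply whatever the other player does...
replies = {opponent: best_reply(opponent) for opponent in "CD"}
# ...yet mutual defection leaves both worse off than mutual cooperation.
both_defect = PAYOFF[("D", "D")]
both_cooperate = PAYOFF[("C", "C")]
```

The dilemma is visible in the two results together: each agent, reasoning alone, defects, while the pair would do better by cooperating.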

In addition, social network analysis (SNA) looks at how the interactions between individuals create an overall network with particular properties. SNA uses mathematical models to look at network-wide issues, and can be used to analyse a range of properties of a given network, for example: the extent to which the network is held together by intermediaries; the ability of the network members to access information; the density of the interactions within the network; and the cohesion of relationships into cliques. Each of these aspects of a network might be seen as emergent properties, as they emerge unplanned from the basic interactions of the actors within an organisation (see Concept 3 for more on this).
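Some of the network properties listed above can be computed directly from a list of ties. The following sketch uses plain Python and a made-up five-person network; the names `TIES`, `intermediaries` and `cliques_of_three` are hypothetical, and treating an intermediary as "an actor whose removal splits the network" is a simplifying assumption (SNA practice also uses finer measures such as betweenness).

```python
from itertools import combinations

# Hypothetical contact network: each pair is an undirected tie between two actors.
TIES = [("A", "B"), ("A", "C"), ("B", "C"), ("C", "D"), ("D", "E")]


def neighbours(ties):
    graph = {}
    for u, v in ties:
        graph.setdefault(u, set()).add(v)
        graph.setdefault(v, set()).add(u)
    return graph


def density(graph):
    """Share of possible ties that actually exist."""
    n = len(graph)
    ties = sum(len(nbrs) for nbrs in graph.values()) / 2
    return ties / (n * (n - 1) / 2)


def is_connected(graph, skip=None):
    """Can every actor (ignoring `skip`) reach every other?"""
    nodes = [node for node in graph if node != skip]
    if not nodes:
        return True
    seen, stack = {nodes[0]}, [nodes[0]]
    while stack:
        for nbr in graph[stack.pop()]:
            if nbr != skip and nbr not in seen:
                seen.add(nbr)
                stack.append(nbr)
    return len(seen) == len(nodes)


def intermediaries(graph):
    """Actors whose removal would split the network."""
    return sorted(node for node in graph if not is_connected(graph, skip=node))


def cliques_of_three(graph):
    """Triples of actors who are all tied to one another."""
    return [
        trio for trio in combinations(sorted(graph), 3)
        if all(b in graph[a] for a, b in combinations(trio, 2))
    ]


network = neighbours(TIES)
```

For this toy network, `density(network)` is 0.5, `intermediaries(network)` returns C and D, and `cliques_of_three(network)` finds the single clique A, B, C. None of these figures is stored on any individual actor; each is a property of the pattern of ties as a whole.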

Box 1: Agent-based modelling (ABM)

ABM is a method which builds artificial social systems ‘from the bottom up’ and provides a powerful analytical tool to model complex, dynamic and highly interactive social processes. It is differentiated from simulations by the fact that the process usually involves detailed empirical insights from real world participant observers. ABM can be used to build model economies or communities that are computer representations having some verisimilitude to real world contexts.

Agent-based models involve three basic ingredients: agents; an environment or space; and rules11. Agents are the ‘people’ of artificial societies. Each agent has internal states and behavioural rules. Some states are fixed for the agent’s life (e.g. agent’s sex, age and vision), whereas others change through interaction with other agents or with the external environment (e.g. individual economic preferences, wealth and cultural identity). The changing nature of states allows ABMs to have ‘non-rational’ agents in model economies or communities. Agents can be heterogeneous; there is no need to rely on representative (homogeneous) agents. The environment is a medium separate from agents, on which the agents operate and with which they interact. These environments can accommodate large numbers of people and different types of people such as consumers, producers and policymakers.

Rules of behaviour are defined for the agents and for the sites of the environment. These rules can also be classified as agent-to-environment rules, environment-to-environment rules, and agent-to-agent rules. Agents relate their behaviour to each other’s. The culture of a society or community emerges and changes as a result of individuals’ acts and behaviours. In an ABM framework, through a rule-based learning process, agents acquire new information and develop rules of thumb, norms and conventions. With agent-based methods, human behaviour can be modelled as changing and adaptable, not merely as an outcome reasoned from general propositions. The introspective qualities of agents and cultural factors directly create and change the social processes. The agent is allowed to re-examine his/her own behaviour step by step and thus learns by trial and error. If agent-based simulation results demonstrate similarities with the case study or the real world story, it is rational to argue that the modelling approach possesses important characteristics of the underlying processes.

ABM has been contrasted with the two standard methods of scientific enquiry12 – induction and deduction. Induction is seen as the discovery of patterns in empirical data, whereas deduction involves specifying a set of axioms and proving consequences that can be derived from those assumptions. Agent-based modelling is like deduction, in that it starts with a set of explicit assumptions but it does not then go on to prove theorems. Like induction, it generates simulated data that can be analysed inductively; unlike induction, simulated data come from a rigorously specified set of rules rather than direct measurement of the real world. Whereas the purpose of induction is to find patterns in data and that of deduction is to find consequences of assumptions, the purpose of agent-based modelling is to aid intuition.
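The three ingredients described in Box 1 (agents, an environment and rules) can be put together in a very small toy model. The sketch below is not the model from any cited work; the ring of 20 sites, the `Agent` fields, the `step` function and every numeric value are assumptions chosen only to show one rule of each type.

```python
import random
from dataclasses import dataclass


@dataclass
class Agent:
    vision: int          # fixed state: how many sites the agent can see each way
    position: int        # changing state: current site on the ring
    wealth: float = 0.0  # changing state: resources gathered so far


def step(agents, resources, regrowth=1.0, capacity=4.0):
    size = len(resources)
    occupied = {agent.position for agent in agents}
    for agent in agents:
        visible = [
            (agent.position + offset) % size
            for offset in range(-agent.vision, agent.vision + 1)
        ]
        # Agent-to-agent rule: sites held by another agent are off limits.
        free = [s for s in visible if s == agent.position or s not in occupied]
        # Agent-to-environment rule: move to the richest free site in view, harvest it.
        target = max(free, key=lambda s: resources[s])
        occupied.discard(agent.position)
        occupied.add(target)
        agent.position = target
        agent.wealth += resources[target]
        resources[target] = 0.0
    # Environment-to-environment rule: every site regrows up to a capacity.
    for site in range(size):
        resources[site] = min(capacity, resources[site] + regrowth)


rng = random.Random(42)  # seeded so the run is repeatable
resources = [rng.uniform(0.0, 4.0) for _ in range(20)]
agents = [Agent(vision=rng.randint(1, 3), position=p) for p in rng.sample(range(20), 5)]
for _ in range(30):
    step(agents, resources)
wealths = sorted(agent.wealth for agent in agents)
```

Comparing the entries of `wealths` after the run is a simple way to see agents that follow identical rules ending up in different positions, because their vision and their local histories differ. Data produced this way come from the stated rules rather than from measurement, which is the sense in which ABM sits between deduction and induction.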

Example: Adaptive agents – A geographer’s view

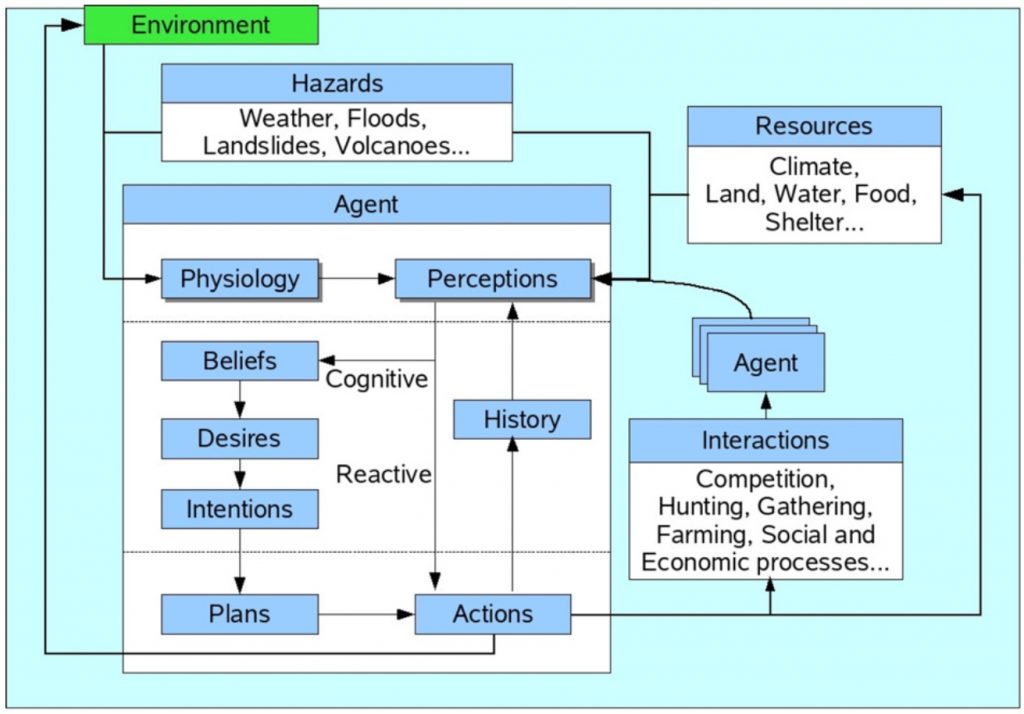

The figure above gives an outline view of an agent-based modelling system. Several key points are represented in this diagram. Interactions with the environment take place through direct impact on physiology, gathering of resources and other agent actions. Interactions between different types of agent are crucial to the evolution of the agent-environment system as a whole, encompassing demographics, spread of disease, competition, cooperation and other social processes. The agent’s perceptions of the environment may lead to direct reaction, such as fleeing from predators, or to a cognitive process that allows for complex considerations including history, culture and beliefs, and emotional state. The perceptions need not be complete or accurate: the possibility of inadequate information or biased views of the world can be allowed for.

The agents are embedded in an environment, with which they interact13. This environment is not restricted in character to the physical world, but might be anything that is not represented using the agents themselves. For example, in models of land-use change, the environment is represented by sub-models for insolation, rainfall, soil moisture, topography and other properties. The environment has a direct impact on an agent’s ability to carry out its functions, and may also provide the resources needed by the agent for its function. Similarly, economic agents may require representation of a marketplace and the facilities to buy and sell goods14.

Implication: Build awareness of influences on adaptive agents, their incentives and relative capacities

The concept of adaptive agents in the aid system emphasises the centrality of human agency in international development and humanitarian work, the ways in which the system inhibits or permits adaptation, and the ways in which adaptation at different levels gives rise to systemic phenomena.

This in turn requires a focus on the lives of a wide range of social actors committed to different strategies, interests and political trajectories. Actor-oriented analysis16 is one way in which this stance has been adopted in the aid world. This focuses on how macro phenomena and pressing human problems result, intentionally and unintentionally, from the complex interplay of specific actors’ strategies, projects, resources, discourses and meanings.

For example, for many outside the humanitarian sector it comes as a surprise that communities in drought-stricken regions actively seek out and use resources, set up various networks of relationships and interactions, and exhibit a number of coping strategies. This notion of adaptive capabilities of communities may be particularly relevant for how international humanitarian assistance is designed and implemented – estimates suggest that ‘no more than 10% of survival in emergencies can be contributed to external sources of relief aid’ 17.

A number of tools may be used to study the behaviour of adaptive agents in the aid world. Agent-based modelling, as described in Box 1, is potentially very useful. One of the most powerful applications to date has been in the field of epidemiology. Swiss researchers have made widespread use of ABM to model the propagation of disease; the Swiss Tropical Institute’s work on malaria is one example.

Given the potentially complex nature of the international aid system, it is important to understand the role of clusters of actors, or networks. One method of understanding these is SNA, which looks explicitly at the structure of the network resulting from these relationships and interactions. SNA offers insights through determining the resilience of networks, the level of connectedness between key agents, and the formation of cliques. SNA uses mathematical principles which – it is argued – are employed ‘intuitively by people trying to solve complex problems which involve many relationships between many things’ 18. An important factor here is to grasp that the network has properties as well as parts, and to analyse those properties that arise from the interactions between the network members. This resonates in many ways with the notion of emergence.

Given the web of relations and interactions among various communities, institutions and countries that characterises the aid world, such approaches have the potential to bring about a shift in perspective to one that emphasises the importance of understanding relationships and behaviours. Some initial movement towards this attitude can be perceived. For example, it has been suggested that development projects should regard ‘relationships management as important as money management’ 19. Rather than seeing aid as an outside force or ‘catalyst’ acting on a developing country, which can intervene without itself being affected, aid agencies should be ‘ready to be influenced by local actors’ and ‘examine more closely with whom it relates and which relationship networks it supports’ 20.

Adaptive management practices, which seek to make maximum use of the capacities of individuals working on a project or programme, can also be a useful approach, the overall goal being to learn by experimentation in order to determine the best management strategies in a given setting. Although adaptive management is being used in some settings, it merits further exploration among aid agencies.

The behaviour of adaptive agents is at the heart of a planning, monitoring and evaluation tool called outcome mapping. This methodology, which incorporates an appreciation of complex systems, has as its primary focus the changes in behaviour (defined as actions, attitudes and relationships) of those stakeholders with whom a programme or project interacts directly. These direct stakeholders are referred to as boundary partners in the outcome mapping terminology. The ideal application of the method requires members of an aid programme or project to work with these actors in order collectively to specify hoped-for changes and to identify specific activities that will help contribute to these changes.

One of the critiques levelled at complexity science is that there is no real way of dealing with power. However, in the aid context, the concept of adaptive agents enables understanding of how certain agents may act in order to withhold or suppress the adaptive capacities of others. To cite a few examples: specific groups who are excluded or marginalised in communities, rural producers who are at the mercy of wholesalers, local NGOs who are at the behest of international agencies, developing country officials who are excluded from trade negotiations. The list goes on: the aid system is full of examples of strong and weak agents that interact in ways that maintain certain kinds of power balances. There are further implications of power and adaptive agents in complex systems, in terms of their capacity to act together in groups (self-organisation, see Concept 9) and how they evolve in relation to each other and the system (co-evolution, see Concept 10).

Next part (part 18): Concept 9 – Self-organisation.

Article source: Ramalingam, B., Jones, H., Reba, T., & Young, J. (2008). Exploring the science of complexity: Ideas and implications for development and humanitarian efforts (Vol. 285). London: ODI. (https://www.odi.org/publications/583-exploring-science-complexity-ideas-and-implications-development-and-humanitarian-efforts). Republished under CC BY-NC-ND 4.0 in accordance with the Terms and conditions of the ODI website.

Header image source: qimono on Pixabay, Public Domain.

References and notes:

1. Gribbin, J. (2004). Deep Simplicity: Chaos, Complexity and the Emergence of Life. London: Allen Lane.

2. ISCID (2005). Encyclopaedia of Science and Philosophy.

3. Harvey, D. (2001). ‘Chaos and Complexity: Their Bearing on Social Policy Research’, Social Issues, 1(2).

4. These kinds of elements are also sometimes described simply as agents, and systems of such agents are also sometimes referred to as complex evolving systems (Mitleton-Kelly, E. (2003). ‘Ten Principles of Complexity and Enabling Infrastructures’ in Complex Systems and Evolutionary Perspectives of Organisations: The Application of Complexity Theory to Organisations, London: Elsevier Press), owing to the fact that from the mass of interactions and adaptations between entities a process of evolution (and co-evolution) emerges.

5. Bithell, M., Brasington, J. and Richards, K. (2006). ‘Discrete-element, individual-based and agent-based models: Tools for interdisciplinary enquiry in geography?’, Geoforum (2006), doi:10.1016/j.geoforum.2006.10.014

6. See Syllabus of Readings Complex Adaptive Systems and Agent-Based Computational Economics.

7. Doran, J. E. (1998). ‘Simulating Collective Misbelief’, Journal of Artificial Societies and Social Simulation 1(1).

8. Gladwell, M. (2000). The Tipping Point: How Little Things Can Make a Big Difference, Boston: Little, Brown.

9. Arthur, W. B. (1990). ‘Positive Feedbacks in the Economy’, Scientific American 262 (February): 92–9; among others.

10. Gillinson, S. (2004). Why Cooperate? A Multi-Disciplinary Study of Collective Action, Working Paper 234, London: ODI.

11. Epstein, J. M. and Axtell, R. (1996). Growing Artificial Societies: Social Science from the Bottom Up, Cambridge, MA: MIT Press.

12. Axelrod, R. (1997). ‘Advancing the Art of Simulation in the Social Sciences’, Complexity, 3(2): 16–22.

13. Russell, S. and Norvig, P. (2003). Artificial Intelligence: A Modern Approach, London: Prentice Hall.

14. Tesfatsion, L. (2002). ‘Agent-based computational economics: Growing economies from the bottom up’, Artificial Life 8: 55–82.

15. Bithell, M., Brasington, J. and Richards, K. (2006). ‘Discrete-element, individual-based and agent-based models: Tools for interdisciplinary enquiry in geography?’, Geoforum (2006), doi:10.1016/j.geoforum.2006.10.014

16. Long, N. (2002). ‘An Actor-oriented Approach to Development Intervention’, Background Paper prepared for APO Meeting. Tokyo, 22–26 April.

17. Hilhorst, D. (2003). ‘Unlocking Disaster Paradigms: An Actor-oriented Focus on Disaster Response’, Abstract submitted for Session 3 of the Disaster Research and Social Crisis Network Panels of the 6th European Sociological Conference, Murcia, Spain, 23–26 September.

18. Johnson, J. (1995). ‘Links, Arrows and Networks: Fundamental Metaphors in Human Thought’ in Batten, D., Casti, J. and Thord, R. Networks in Action, Berlin: Springer Verlag.

19. Eyben, R. (ed.) (2006). Relationships for Aid, London: Earthscan.

20. Eyben, R. (ed.) (2006). Relationships for Aid, London: Earthscan.