The disastrous effects of opinion-based decisions, and how knowledge management can be better evidence-based

This article is part of an ongoing series on evidence-based knowledge management.

In an article in The Oxford Review, David Wilkinson gives a heartbreaking example of the devastating effects of opinion-based decisions:

LuAnn was a 13 year old student in North Carolina who was displaying extreme learned helplessness … When the researchers investigated her background and home-life [they] found a number of issue which appeared to lead to this state. The first of which was that from an early age, if she wet the bed her mother would punish her by spanking her. Her mother believed that the bed wetting behaviour was actually attention seeking behaviour and needed to be punished in order to stop it.

From the mother’s point of view this all made sense and led to the decision to spank LuAnn for wetting the bed from an early age. In fact this decision only made the situation worse.

This is how a world view or set of beliefs lead to actions which appear to be very logical from that system of thinking. This is an example of opinion-based decision-making.

He goes on to discuss the role of confirmation bias in opinion-based decision-making:

Once we have a belief about something our brains starts to actively filter for evidence that our belief is correct … This is the issue with opinion-based decisions. The moment we make up our mind that something is a certain way our brains start to look for the evidence to confirm it. The problem is at the same time it also neatly discards any evidence to the contrary. In effect, a belief quickly becomes reality as we gather more and more evidence that the belief is true.

Wilkinson then advises that the counter to opinion-based decision-making is evidence-based decision-making:

Evidence-based decisions … are of an entirely different nature. They are based on a research foundation. This is to say that they are based on testing not confirming evidence.

However, decision-making that is based on little or no evidence is unfortunately widespread in organisations and government agencies. We’ve discussed a number of examples in previous RealKM Magazine articles, including Net Promoter Score, nudge management, predictive algorithms, team building events, and the use of social network sites in the workplace.

What about the knowledge management (KM) discipline? As Stephen Bounds and others have warned, the KM discipline has a mixed track record on evidence-based decision-making. As a result, KM is now falling behind both the overall field of management and other management disciplines such as HR.

KM discussion forum comments, such as those reviewed by Stephen Bounds, reveal that some opinion-based decision-making occurs in KM. Also evident is a tendency for people working in KM to rely heavily on their professional expertise, to the exclusion of other sources of evidence, in particular research findings. The confirmation bias discussed by Wilkinson plays a role in this. As Stephen Bounds advises, “Unfortunately we have become complacent about assuming value of our techniques without searching for how that value may be realised in practice.”

When research findings are used in KM, it’s evident that the quality and relevance of studies are often not adequately appraised. As we show in our quality of science and science communication series, a disturbing amount of poor-quality research has been published, so care needs to be taken to use only trustworthy studies. Research is also done at a particular point in time and in a specific context, but circumstances can change over time and what is relevant in one context may not be appropriate in another. I recently discussed an example of this.

Moving KM to a better evidence-based foundation

Moving the KM discipline to a better evidence-based foundation is actually quite straightforward. What’s needed is for everyone working in KM to understand the fundamentals of:

- why we need evidence-based KM

- what we need to do to implement evidence-based KM – the four sources and six steps

- how to appraise the quality of evidence.

A good grounding in these fundamentals can be gained from reviewing the following introductory materials, many of which have been produced by the Center for Evidence-Based Management (CEBMa):

1. Introductory video

An excellent introduction to the what, why, and how of evidence-based management can be found in the CEBMa video above (which is also accessible through this direct link). The video is aimed at an HR audience but is equally relevant to KM.

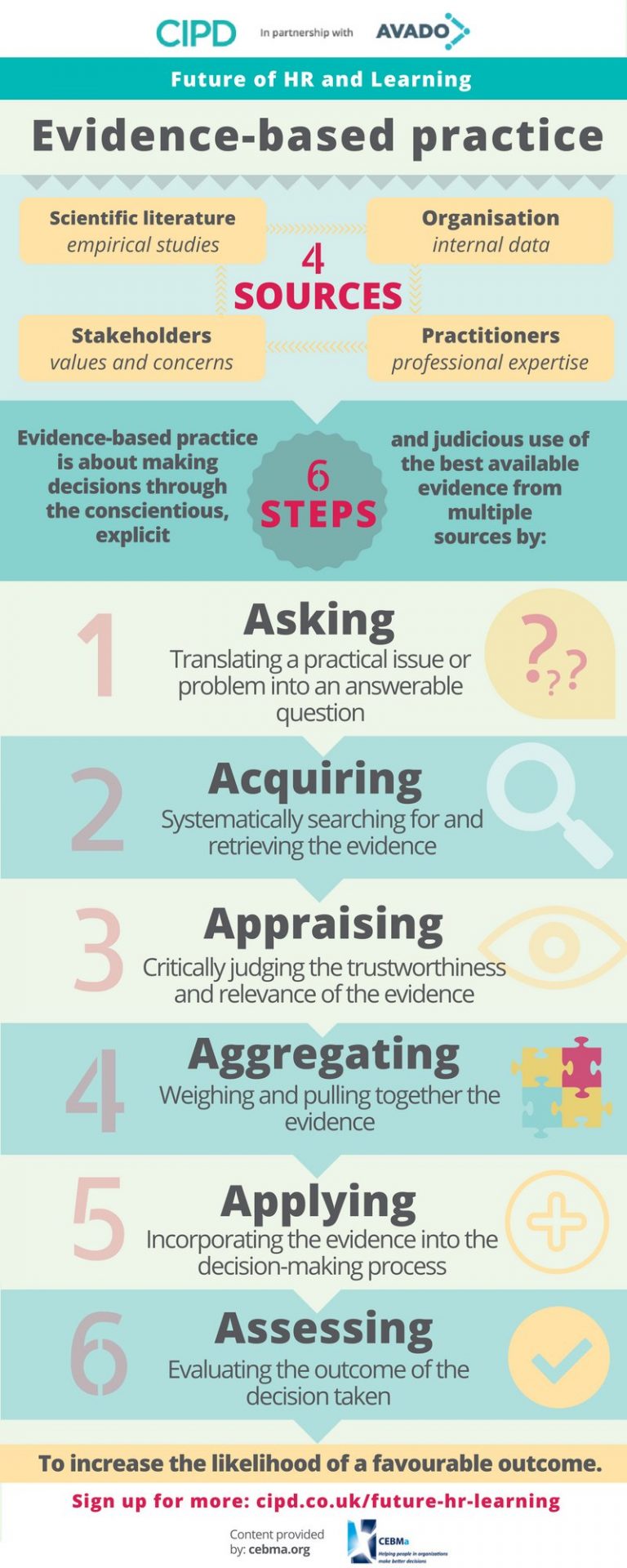

2. Evidence-based practice infographic and paper

The four sources and six steps of evidence-based management are summarised in the infographic below, and further information can be found in the concise 20-page CEBMa paper Evidence-Based Practice: The Basic Principles [1].

The four sources of evidence as described in the paper are:

- The scientific literature. The first source of evidence is scientific research published in academic journals. To be able to include up-to-date evidence from the scientific literature in your decision-making, it is essential to know how to search for studies and to be able to judge their trustworthiness and relevance.

- Evidence from the organisation. A second source of evidence is the organisation itself. Organisational evidence comes in many forms, for example financial data, operational data, or client and employee feedback.

- Evidence from practitioners. A third source of evidence is the professional experience and judgement of managers, consultants, business leaders, and other practitioners. Professional experience is not intuition, opinion, or belief. Rather, it is the specialized knowledge acquired through repeated experience and reflection over time.

- Evidence from stakeholders. A fourth source of evidence is stakeholder values and concerns. Stakeholders are any individuals or groups who may be affected by an organisation’s decisions and their consequences. They can be internal or external to the organisation.

3. Evidence appraisal guide and mobile phone application

The quality and relevance of research evidence varies greatly, so it needs to be critically appraised to judge its trustworthiness, value and relevance in a particular context. To assist with this, the CEBMa has prepared a critical appraisal guide and mobile phone application.

4. Levels of evidence guide

It is important to be able to determine which evidence is the most authoritative. So-called ‘levels of evidence’ are used for this purpose, and specify a hierarchical order for various research designs based on their internal validity. To assist with this, the CEBMa has prepared a levels of evidence guide.

5. Systematic review and evidence synthesis paper and Meta-analysis Wikipedia page

As discussed in this paper [2], systematic review and evidence synthesis assist the acquisition and analysis of evidence. Systematic review “can help address managerial problems by producing a reliable knowledge base through accumulating findings from a range of studies.” Evidence synthesis is done as part of a systematic review, and there is a range of approaches to carrying out the synthesis.

A systematic review is better than a traditional literature review:

A systematic review addresses a specific question, utilizes explicit and transparent methods to perform a thorough literature search and critical appraisal of individual studies, and draws conclusions about what we currently know and do not know about a given question or topic. Systematic simply means that reviewers follow an appropriate (but not standardized or rigid) design and that they communicate what they have done. In essence, conducting systematic reviews means applying the same level of rigor to the process of reviewing literature that we would apply to any well-conducted and clearly-reported primary research.

Making decisions on the basis of a traditional literature review is better than making them on the basis of an individual paper, but making them on the basis of a systematic review is better again. Because of this, I use systematic reviews as the basis of my RealKM Magazine articles wherever possible, meaning that you can in turn use these articles to assist your evidence acquisition (if I’ve used a systematic review, this will be clearly stated in the article text).

A meta-analysis is a statistical analysis that combines the results of multiple scientific studies, and is often carried out as part of a systematic review process.
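
To make the idea of "combining results" concrete, here is a minimal sketch of one common pooling approach, fixed-effect inverse-variance weighting, in which more precise studies count for more. This is offered only as an illustration: the function name `pooled_effect` and the three study results are hypothetical and are not taken from the papers cited in this article, and a real meta-analysis would also need to consider issues such as between-study heterogeneity and publication bias.

```python
import math


def pooled_effect(effects, std_errors):
    """Fixed-effect inverse-variance pooling.

    Returns (pooled estimate, standard error of the pooled estimate).
    Each study is weighted by 1 / SE^2, so precise studies count more.
    """
    if not effects or len(effects) != len(std_errors):
        raise ValueError("need at least one study and one standard error per effect")
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    estimate = sum(w * e for w, e in zip(weights, effects)) / total_weight
    return estimate, math.sqrt(1.0 / total_weight)


# Hypothetical effect sizes and standard errors, for illustration only.
effects = [0.30, 0.10, 0.25]
std_errors = [0.10, 0.20, 0.10]

estimate, se = pooled_effect(effects, std_errors)
# Approximate 95% confidence interval, assuming a normal distribution.
low, high = estimate - 1.96 * se, estimate + 1.96 * se
print(f"pooled effect = {estimate:.3f}, 95% CI = ({low:.3f}, {high:.3f})")
```

The pooled standard error is smaller than that of any single study in the example, which is the statistical reason a well-conducted meta-analysis can support firmer conclusions than an individual paper.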

6. Open access publishing information

As the video above acknowledges, a serious impediment to evidence-based decision-making is that a large proportion of research evidence is locked away behind academic journal paywalls. Many people working in the KM discipline would not have ready access to full-text journal articles, making it very difficult for them to use this evidence in their decisions.

While there’s a growing trend towards open access publishing, there’s still a long way to go, with only around one-third of scholarly literature currently being open access. As I’ve previously warned, publishing KM research in paywalled journals runs counter to the practice of knowledge sharing, which is a fundamental aspect of KM. Because of this, people working in the KM discipline should strongly support open access publishing.

7. Further information

Once you’ve digested these introductory materials, you can visit the Center for Evidence-Based Management resources and tools page for a wide range of more detailed information including papers, presentations, and teaching materials. The resources are provided under a Creative Commons license.

References:

1. Barends, E., Rousseau, D. M., & Briner, R. B. (2014). Evidence-based management: The basic principles. Amsterdam: Center for Evidence-Based Management.

2. Briner, R. B., & Denyer, D. (2012). Systematic review and evidence synthesis as a practice and scholarship tool. Handbook of evidence-based management: Companies, classrooms and research, 112-129.

Also published on Medium.