Lessons Learned Part 14: Maintenance

This article is part 14 of a series of articles exploring the Lessons Learned Life Cycle.

Indeed he knows not how to know who knows not how to unknow. – Sir Richard Francis Burton

Maintenance of a lessons learned system has two facets: maintenance of the content to ensure that it is up to date and relevant, and maintenance of the system itself, using feedback from users to modify the capture and dissemination processes in an effort to increase learning. Content maintenance is sometimes viewed as “organizational forgetting,” in that it requires organizations to “unlearn” what they had previously learned. Content maintenance is a conscious, intentional forgetting that can lead to increased competitiveness, rather than the accidental forgetting that comes with a poor lessons learned process.

Just as with learning, organizational unlearning is more than a modification to a database. Successful learning implies that the lesson has been deeply embedded in the fabric of the organization, which makes it all the more difficult to excise such a lesson from organizational memory when circumstances change and it becomes obsolete. Therefore, from a lesson maintenance standpoint, it may make sense to rethink the concept of unlearning.

The unlearning of any given lesson is unlikely to occur unless a new lesson is discovered that supersedes it. In this view, the organization is not unlearning old lessons so much as learning new ones, which allows the focus of the lessons learned system to remain on learning. In addition, this approach acknowledges that lessons are not discrete units to be learned or unlearned in a binary fashion, but that there is a spectrum of learning. Lessons may be refined, modified, and added to, as well as being completely replaced. In every case the framework is that of adding knowledge, not subtracting it.

Here again, knowledge intermediaries play an important role, scanning the lesson repository as new lessons come in to ensure there are no conflicts. It may be possible to automate this conflict resolution, but such a system will need to be extremely user-friendly to replace human intermediaries successfully, given that these intermediaries often exist because knowledge producers and consumers lack the time or training to do this work themselves.
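Automated support does not have to replace the intermediary to be useful. The following is a minimal sketch, assuming lessons are stored as plain text, that surfaces existing lessons whose wording overlaps heavily with a newly submitted one so that an intermediary can check them for conflicts. Word overlap (Jaccard similarity) is a stand-in chosen for simplicity: it finds related lessons but cannot tell whether they actually contradict each other. The `Lesson` class, the `flag_possible_conflicts` function, and the 0.3 threshold are all hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class Lesson:
    """Hypothetical minimal lesson record: an identifier plus free text."""
    lesson_id: str
    text: str


def tokens(text: str) -> set[str]:
    # Lowercase alphanumeric words; words of 3 characters or fewer are dropped as noise.
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if len(w) > 3}


def jaccard(a: set[str], b: set[str]) -> float:
    # Size of the intersection divided by size of the union; 0.0 if either set is empty.
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def flag_possible_conflicts(
    new: Lesson, repository: list[Lesson], threshold: float = 0.3
) -> list[tuple[str, float]]:
    """Return (lesson_id, similarity) for existing lessons a human should compare
    against the new one, most similar first. Overlap only; not a contradiction test."""
    new_tokens = tokens(new.text)
    scored = [(old.lesson_id, jaccard(new_tokens, tokens(old.text))) for old in repository]
    flagged = [item for item in scored if item[1] >= threshold]
    return sorted(flagged, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    repo = [
        Lesson("L-001", "Always schedule vendor security reviews before contract signature."),
        Lesson("L-002", "Book conference rooms two weeks ahead."),
    ]
    new = Lesson("L-101", "Schedule vendor security reviews after contract signature to save time.")
    for lesson_id, score in flag_possible_conflicts(new, repo):
        print(f"Review {lesson_id} against L-101 (similarity {score:.2f})")
```

Here the new lesson shares most of its vocabulary with L-001 but reverses its advice, which is exactly the kind of pair an intermediary needs to see; the final judgment about which lesson supersedes the other stays with a person.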

The second aspect of maintenance involves monitoring and improving the lessons learned system itself. This presumes some way of measuring the system's performance quantitatively. There is no precise way to quantify success, but a variety of measures, each with its own weaknesses, can be used to paint an overall picture of the health of a lessons learned system:

- Activity can be measured as the number of database searches, website hits, requests to intermediaries for assistance, etc. While activity measurements do not guarantee learning, they are at least an indicator of the system's acceptance and use.

- Employee surveys can be conducted to get opinions on the usefulness of the system. Again, an opinion survey does not guarantee that learning is taking place, but it can uncover difficulties with user acceptance.

- The content of the repository can be evaluated for its value to the business. Lessons can simply be counted, capture rates can be calculated, or lessons can be weighted based on their alignment with business goals. Anecdotes and testimonials can be collected and analyzed to assess the cost savings or revenue attributable to the lessons. These anecdotes can also serve as stories that encourage more participation in the system.

- The concept of learning curves has been used for many years to measure productivity improvements in organizations. Typical learning curves plot a measure of performance against accumulated experience (elapsed time or cumulative output): pizzas delivered per day, hours per vehicle assembled, etc. The same approach could be used in areas where mistakes are tracked: problems logged per development project, customer complaints per month, etc. A sustained reduction in errors is evidence that lessons are being learned.

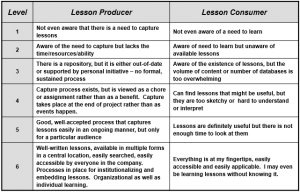
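A common way to quantify a learning curve is to fit a power law, y = a·x^(-b), where x is cumulative experience (here, the project number), y is the number of problems logged on that project, a is the count on the first project, and b is the learning rate: each doubling of experience multiplies errors by 2^(-b). The sketch below fits a and b by ordinary least squares on the logarithms of both variables. It is a minimal illustration under stated assumptions: the function names are hypothetical, every count must be positive, at least two projects are needed, and real error data will be far noisier than the synthetic series used here.

```python
import math


def fit_learning_curve(counts: list[float]) -> tuple[float, float]:
    """Fit y = a * x**(-b) to per-project error counts by least squares in log-log space.

    counts[i] is the number of problems logged on project i + 1 (all counts must be > 0,
    and at least two projects are required). Returns (a, b); b > 0 means errors are falling.
    """
    xs = [math.log(i + 1) for i in range(len(counts))]
    ys = [math.log(c) for c in counts]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx                      # slope of log(y) on log(x), equal to -b
    intercept = mean_y - slope * mean_x    # equal to log(a)
    return math.exp(intercept), -slope


def progress_ratio(b: float) -> float:
    """Factor by which errors are multiplied each time cumulative experience doubles."""
    return 2 ** (-b)


if __name__ == "__main__":
    # Synthetic, hypothetical data: 8 projects following a = 40, b = 0.5 exactly.
    counts = [40 * x ** -0.5 for x in range(1, 9)]
    a, b = fit_learning_curve(counts)
    print(f"a = {a:.1f}, b = {b:.2f}, progress ratio = {progress_ratio(b):.2f}")
```

For this synthetic series the fit recovers values very close to a = 40 and b = 0.5, and a progress ratio of about 0.71 means roughly 29% fewer problems each time the number of completed projects doubles. Tracking b over successive periods is one way to see whether the rate of learning itself is improving.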

It has become popular in recent years to propose maturity models for various disciplines, and knowledge management is no different. While these maturity models tend to assess the health of an organization’s knowledge management processes in broad terms, it might also be useful to track the progress of a lessons learned system in terms of the maturity of its primary constituents – knowledge producers and consumers. Here’s my attempt at such a model:

Through the use of such a model, it may be possible to survey the organization to not only assess its overall health, but also to identify areas of poor learning that can be targeted for improvement.

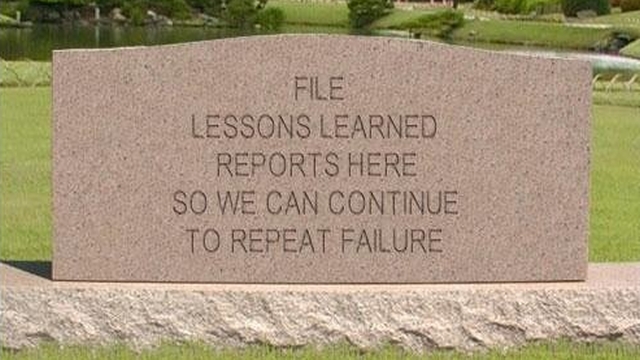
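If the survey asks respondents to rate their group against the model, the results can be grouped to find weak spots. The following is a minimal sketch, assuming a hypothetical 1 to 5 maturity score per respondent for each role (the real levels are whatever the model defines) and a hypothetical cutoff of 2.5. The `weak_areas` function and the response layout are illustrative, not part of the model.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical response layout: (department, role, score), where role is
# "producer" or "consumer" and score is a 1-5 maturity rating.
Response = tuple[str, str, int]


def weak_areas(
    responses: list[Response], threshold: float = 2.5
) -> list[tuple[tuple[str, str], float]]:
    """Return (department, role) groups whose average score is below threshold, weakest first."""
    groups: dict[tuple[str, str], list[int]] = defaultdict(list)
    for department, role, score in responses:
        groups[(department, role)].append(score)
    averages = {key: mean(scores) for key, scores in groups.items()}
    below = [(key, avg) for key, avg in averages.items() if avg < threshold]
    # Sort by average, then by group name so ties come out in a stable order.
    return sorted(below, key=lambda item: (item[1], item[0]))


if __name__ == "__main__":
    responses = [
        ("Engineering", "producer", 4), ("Engineering", "producer", 3),
        ("Engineering", "consumer", 2), ("Engineering", "consumer", 1),
        ("Sales", "producer", 2), ("Sales", "consumer", 4),
    ]
    for (department, role), avg in weak_areas(responses):
        print(f"{department} {role}s: average maturity {avg}")
```

Breaking scores out by role as well as by department reflects the model's focus on producers and consumers separately: a group may capture lessons diligently but rarely consult them, or the reverse, and each calls for a different intervention.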

In any event, it’s important to have a strategy for maintaining and periodically cleaning up your lessons learned repository. Otherwise you will eventually encounter:

- lessons that are misapplied

- lessons that are no longer relevant and just clutter up search results

- lessons that contradict each other
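Part of such a cleanup strategy can be mechanized. The sketch below is one possible approach, assuming each lesson record carries a hypothetical `last_reviewed` date and an optional `superseded_by` link to the lesson that replaced it. Superseded lessons are queued for archiving rather than deletion, in keeping with the idea of adding knowledge rather than subtracting it, and lessons not reviewed within a hypothetical one-year window are queued for an intermediary to confirm or retire. The `LessonRecord` class and `cleanup_queue` function are illustrative names only.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class LessonRecord:
    lesson_id: str
    last_reviewed: date
    # Hypothetical link to the newer lesson that replaces this one, if any.
    superseded_by: Optional[str] = None


def cleanup_queue(
    records: list[LessonRecord], today: date, max_age_days: int = 365
) -> dict[str, list[str]]:
    """Split lessons into an archive queue (superseded) and a review queue (stale).

    Superseded lessons go to "archive" regardless of age; lessons that are still
    current but were last reviewed before the cutoff go to "review".
    """
    cutoff = today - timedelta(days=max_age_days)
    archive = [r.lesson_id for r in records if r.superseded_by is not None]
    review = [
        r.lesson_id
        for r in records
        if r.superseded_by is None and r.last_reviewed < cutoff
    ]
    return {"archive": archive, "review": review}


if __name__ == "__main__":
    records = [
        LessonRecord("L-001", date(2020, 1, 15), superseded_by="L-101"),
        LessonRecord("L-002", date(2021, 3, 1)),
        LessonRecord("L-101", date(2023, 6, 1)),
    ]
    print(cleanup_queue(records, today=date(2023, 12, 31)))
```

The archive queue addresses cluttered search results, while the review queue addresses misapplied or outdated lessons; contradictions between current lessons still need the kind of human comparison described earlier.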

Other articles in this series: This article (part 14) is the final article in the Lessons Learned Life Cycle series. For the previous articles in the series, please visit the series page.

Article source: Lessons Learned Part 14: Maintenance.

Header image source: Alexas Fotos on Pixabay, Public Domain.

References and further reading:

- Linda Argote, “Organizational Learning Curves: An Overview”

- Michael Hine and Michael Goul, “The Design, Development, and Validation of a Knowledge-Based Organizational Learning Support System”

- Pablo de Holan et al., “Managing Organizational Forgetting”

- Manfred Langen, “Holistic Development of KM within the KM Maturity Model”